Installation

The StarShip CodeReviewer, an important module of the StarShip suite, comes pre-integrated and is installed along with the primary StarShip package.

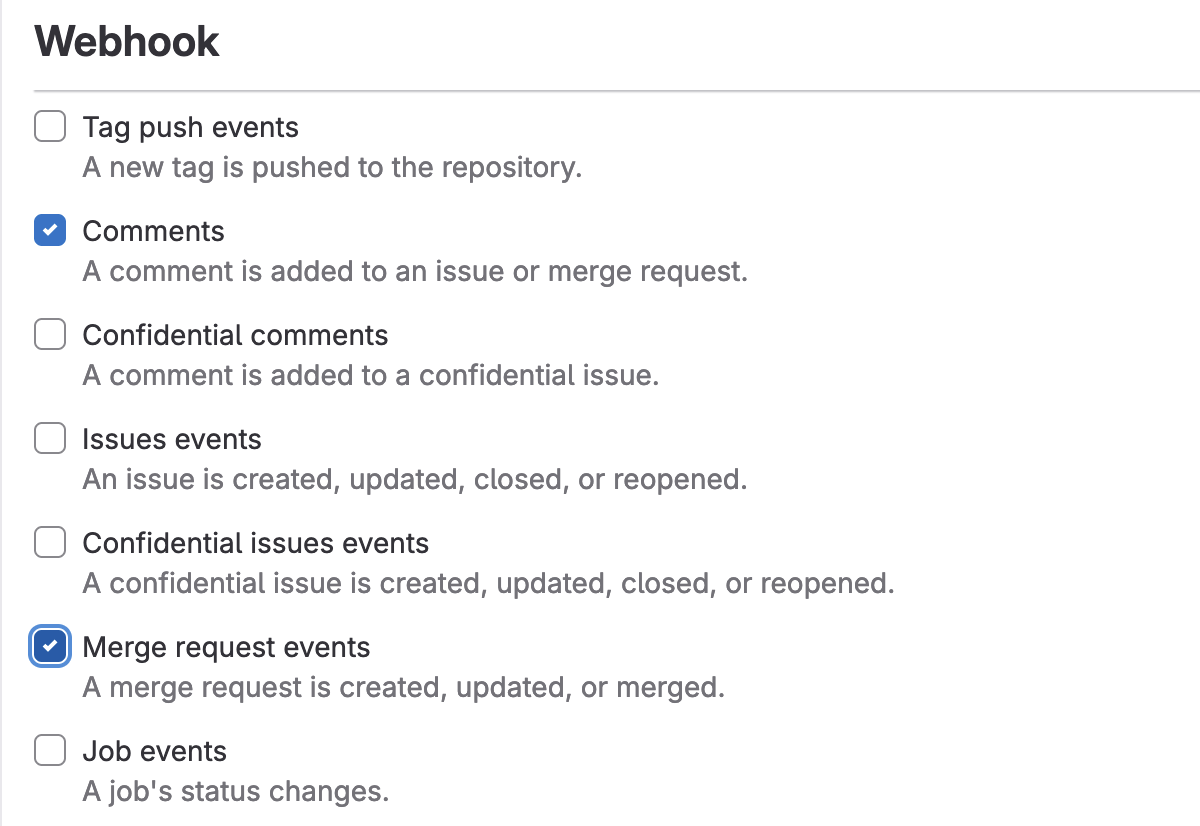

Important Configuration Note: When configuring the GitLab webhook for the StarShip CodeReviewer, enable two triggers:

Merge Requests Events: This trigger ensures that merge request creation events are promptly communicated to the StarShip CodeReviewer, so the module can review the proposed code changes in a timely manner.

Comments: This trigger allows the StarShip CodeReviewer to be notified of, and act upon, comments on merge requests that start with @codegpt. It is what makes interactive review possible. See the Trigger CodeReviewer Guide for more details.

With both triggers configured, the StarShip CodeReviewer receives the events it needs to review new merge requests and to respond to review comments.
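To illustrate what the two triggers deliver, here is a minimal sketch of how a webhook receiver could tell the two event types apart. It relies on the standard GitLab webhook payload fields (`object_kind`, `object_attributes.action`, `object_attributes.noteable_type`, `object_attributes.note`). The function name `classify_gitlab_event` and its return values are hypothetical, and this is not the StarShip CodeReviewer's actual implementation.

```python
from typing import Any, Mapping

BOT_MENTION = "@codegpt"  # comment prefix named in this guide


def classify_gitlab_event(payload: Mapping[str, Any]) -> str:
    """Return 'review', 'comment', or 'ignore' for a GitLab webhook payload.

    Illustrative only: the labels and rules are assumptions, not the
    StarShip CodeReviewer's real logic.
    """
    kind = payload.get("object_kind")
    attrs = payload.get("object_attributes") or {}

    # "Merge Requests Events" trigger: react to newly created merge requests.
    if kind == "merge_request" and attrs.get("action") == "open":
        return "review"

    # "Comments" trigger: GitLab sends these as "note" events. Keep only
    # merge request comments whose text starts with the bot mention.
    if kind == "note" and attrs.get("noteable_type") == "MergeRequest":
        if str(attrs.get("note", "")).lstrip().startswith(BOT_MENTION):
            return "comment"

    return "ignore"
```

If only one of the two triggers is enabled in GitLab, the corresponding branch above would simply never be reached, which is why both need to be selected.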

Environment Variable for LLM

OpenAI GPT4 Assistant API

By default, the StarShip CodeReviewer uses the Azure OpenAI GPT4 Assistant API, with the following default environment variables:

USE_OPENAI_ASSISTANTS_API=true

LLM_PROVIDER="azure"

LLM_MODEL="azure/csg-gpt4"

OpenAI GPT4 Chat Completion API

To use the Chat Completion API instead, set USE_OPENAI_ASSISTANTS_API to false:

USE_OPENAI_ASSISTANTS_API=false

LLM_PROVIDER="azure"

LLM_MODEL="azure/csg-gpt4"

The Azure OpenAI variables can be set as in the following example:

AZURE_API_KEY="xxxxxxxxxxxxxx"

AZURE_API_BASE="https://opencsg-us.openai.azure.com"

AZURE_API_VERSION="2024-02-15-preview"
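As a way to check a deployment before starting the service, the variables above could be read and validated roughly as follows. This is a sketch under stated assumptions: the fallback values mirror the defaults listed in this guide, but the helper names (`LLMSettings`, `load_llm_settings`, `missing_azure_vars`) and the set of strings accepted as "true" are hypothetical and may differ from how StarShip parses its environment.

```python
import os
from dataclasses import dataclass
from typing import List, Mapping, Optional


@dataclass(frozen=True)
class LLMSettings:
    use_assistants_api: bool
    provider: str
    model: str


def parse_bool(value: str) -> bool:
    # Assumption: common truthy spellings count as true.
    return value.strip().lower() in {"1", "true", "yes", "on"}


def load_llm_settings(env: Optional[Mapping[str, str]] = None) -> LLMSettings:
    """Read the LLM variables, falling back to the defaults in this guide."""
    env = os.environ if env is None else env
    return LLMSettings(
        use_assistants_api=parse_bool(env.get("USE_OPENAI_ASSISTANTS_API", "true")),
        provider=env.get("LLM_PROVIDER", "azure"),
        model=env.get("LLM_MODEL", "azure/csg-gpt4"),
    )


def missing_azure_vars(env: Mapping[str, str]) -> List[str]:
    """List the Azure OpenAI variables that are unset or empty."""
    required = ("AZURE_API_KEY", "AZURE_API_BASE", "AZURE_API_VERSION")
    return [name for name in required if not env.get(name)]
```

Calling `load_llm_settings()` with no argument reads the current process environment, and `missing_azure_vars(os.environ)` returns an empty list when all three Azure variables are present.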

Local On-Premises LLM

For a local on-premises LLM, set the following environment variables (for example):

LLM_PROVIDER="custom"

LLM_MODEL="openai//data/models/deepseek-coder-33b-instruct"

CUSTOM_LLM_API_BASE="http://47.93.24.244:30900/v1"
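The double slash in the LLM_MODEL value above is easy to mistake for a typo. One plausible reading, which is an assumption and not confirmed by this guide, is `<prefix>/<model name>`, where the model name happens to be an absolute path on the serving host. Under that assumption, the value could be split like this (the function name `split_model` is hypothetical):

```python
from typing import Tuple


def split_model(llm_model: str) -> Tuple[str, str]:
    """Split an LLM_MODEL value at its first slash.

    Assumes the format "<prefix>/<model name>"; everything after the
    first slash, including any further slashes, is the model name.
    """
    prefix, sep, name = llm_model.partition("/")
    if not sep or not prefix or not name:
        raise ValueError(f"expected '<prefix>/<model name>', got {llm_model!r}")
    return prefix, name


# split_model("openai//data/models/deepseek-coder-33b-instruct")
# returns ("openai", "/data/models/deepseek-coder-33b-instruct")
```

Splitting only at the first slash keeps the leading slash of the model path intact, so removing the "extra" slash from the configured value would change the model name.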